- Introduction to Model Deployment and Serving

- TensorFlow Serving: Flexible, High-Performance Serving System

- ONNX: Open Neural Network Exchange

- Seldon: Scalable Model Deployment on Kubernetes

- Comparative Analysis of TensorFlow Serving, ONNX, and Seldon

- Best Practices for Model Deployment

- Case Studies and Real-World Applications

- Conclusion and Future Directions

Introduction to Model Deployment and Serving

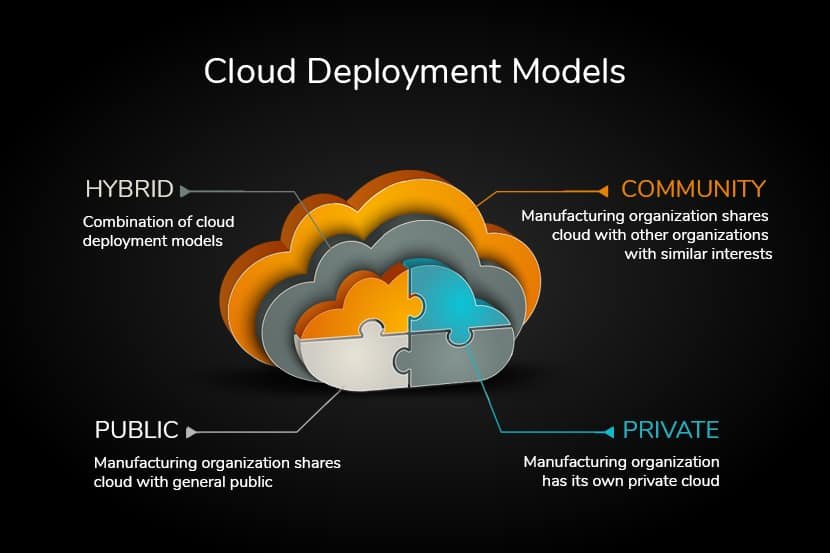

In the rapidly evolving field of machine learning, model deployment and serving play a critical role in transforming trained models into actionable insights. Deploying models into production environments is essential for enabling real-time predictions, which drive decision-making processes across various industries. Whether it is predicting customer churn, recommending products, or detecting fraudulent activities, the ability to serve machine learning models efficiently and reliably is paramount.

Model deployment involves taking a trained machine learning model and making it accessible to applications through an API or other interfaces. This process ensures that the model can handle incoming data, make predictions, and return results in a timely manner. However, deploying models into production is not without its challenges. Issues such as scalability, latency, model versioning, and monitoring must be addressed to ensure optimal performance and reliability.

Serving a model effectively means that the deployed model must be able to handle varying loads, provide low-latency responses, and seamlessly integrate with existing systems. It also requires robust monitoring and logging to ensure that the model performs as expected over time and under different conditions. As the landscape of model deployment and serving evolves, various tools and frameworks have emerged to address these challenges.

This comprehensive guide will delve into three key tools that have gained prominence in the realm of model deployment and serving: TensorFlow Serving, ONNX, and Seldon. Each of these tools offers unique features and capabilities that cater to different deployment needs and environments. By exploring their functionalities and use cases, we aim to provide a detailed understanding of how they can be leveraged to deploy and serve machine learning models effectively.

As we navigate through the intricacies of TensorFlow Serving, ONNX, and Seldon, we will highlight their strengths, limitations, and best practices. This will equip practitioners with the knowledge needed to make informed decisions when selecting the appropriate tool for their specific deployment requirements.

TensorFlow Serving: Flexible, High-Performance Serving System

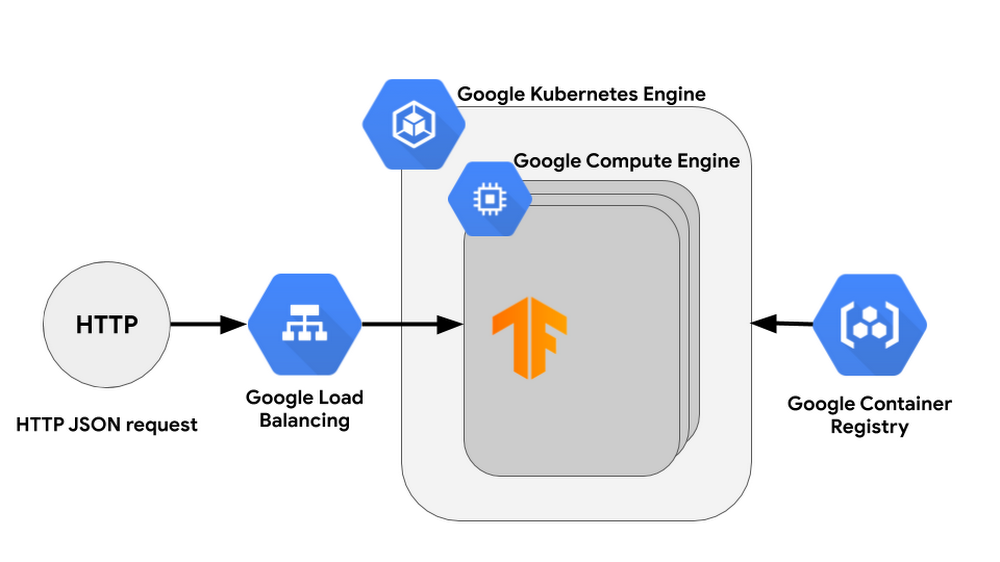

TensorFlow Serving is a robust, high-performance system designed specifically for serving machine learning models in production environments. Developed by the TensorFlow team, it serves TensorFlow models out of the box and has a flexible, extensible architecture that can be adapted to serve other kinds of models. This flexibility makes it a strong choice for organizations that need to deploy models into production efficiently and at scale.

The architecture of TensorFlow Serving is modular, which facilitates the integration of new algorithms and model types without significant changes to the core system. At its heart, TensorFlow Serving uses a model server that handles the loading and serving of models. This server is designed to handle multiple versions of a model simultaneously, allowing for seamless updates and rollbacks. This versioning capability is crucial for maintaining model integrity and ensuring consistent performance.

One of the key features of TensorFlow Serving is its ability to manage the model lifecycle: loading, serving, and unloading models. This is typically driven by a configuration file that specifies each model's name, its base path, optionally the specific versions to serve, and other relevant parameters. The server can also be configured to detect new model versions in the base path automatically, which streamlines the deployment process.
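
As a rough illustration of such a configuration file, the sketch below renders a `model_config_list` entry in the protobuf text format that TensorFlow Serving reads. The helper name `render_model_config`, the model name `churn_model`, and the path `/models/churn_model` are hypothetical and chosen only for this example.

```python
def render_model_config(name, base_path, versions=None):
    """Render one model entry of a TensorFlow Serving model config file.

    `name` and `base_path` are illustrative values supplied by the caller.
    If `versions` is given, those versions are pinned; otherwise the server's
    default version policy applies.
    """
    lines = [
        "model_config_list {",
        "  config {",
        f'    name: "{name}"',
        f'    base_path: "{base_path}"',
        '    model_platform: "tensorflow"',
    ]
    if versions:
        # Pin specific versions, e.g. to keep an old one available for rollback.
        lines.append("    model_version_policy {")
        lines.append("      specific {")
        lines.extend(f"        versions: {v}" for v in versions)
        lines.append("      }")
        lines.append("    }")
    lines.extend(["  }", "}"])
    return "\n".join(lines) + "\n"


# Hypothetical model: serve versions 1 and 2 side by side.
config_text = render_model_config("churn_model", "/models/churn_model", versions=[1, 2])
```

The resulting text can be written to a file and passed to the model server through its `--model_config_file` option. Omitting `versions` leaves version selection to the server's default policy.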

Monitoring and logging are also integral components of TensorFlow Serving. The system exposes metrics such as request counts, error rates, and latency, which can be scraped by Prometheus and visualized in Grafana. This monitoring capability helps in diagnosing issues and tuning model performance while the system is running.

To set up TensorFlow Serving, start by installing the TensorFlow Serving package. Next, configure the server with a configuration file that specifies the model base path and other parameters. Once started, the server begins serving the specified models. Model versioning is managed through the directory structure under the model base path, where each version lives in its own numbered subdirectory, allowing for easy updates and rollbacks. Monitoring tools can then be integrated to keep track of model performance.
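
To make the versioned directory layout and the resulting endpoints more concrete, here is a minimal sketch. It assumes the server's REST API is enabled on port 8501 (a common choice, but still an assumption here), and the helper names, the host, and the model name are hypothetical.

```python
import json
from pathlib import Path


def available_versions(base_path):
    """Return the numeric version subdirectories under base_path, ascending."""
    root = Path(base_path)
    return sorted(
        int(p.name) for p in root.iterdir() if p.is_dir() and p.name.isdigit()
    )


def predict_url(host, model_name, version=None, port=8501):
    """Build a REST predict URL; without a version the server picks its default."""
    version_part = f"/versions/{version}" if version is not None else ""
    return f"http://{host}:{port}/v1/models/{model_name}{version_part}:predict"


def predict_body(instances):
    """Serialize inputs in the row-oriented 'instances' request format."""
    return json.dumps({"instances": instances})
```

A client would POST `predict_body(...)` to `predict_url(...)`. Passing an explicit version makes it possible to compare a new version against the previous one before switching traffic over.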

For more detailed instructions and resources, visit the official TensorFlow Serving website. Here, you will find comprehensive guides, documentation, and best practices to help you get the most out of TensorFlow Serving.

ONNX: Open Neural Network Exchange

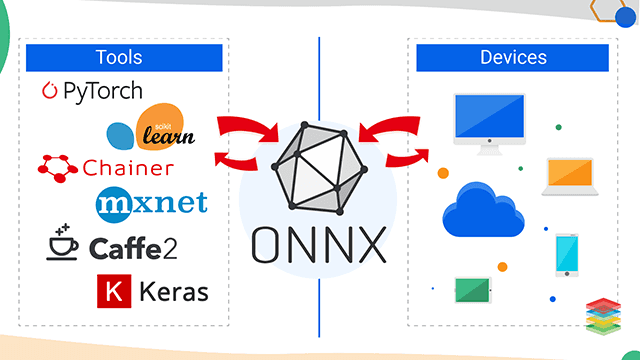

Open Neural Network Exchange (ONNX) is a widely recognized open format designed to represent machine learning models. It was developed through a collaborative effort by prominent technology corporations including Microsoft and Facebook. The primary objective of ONNX is to facilitate interoperability and flexibility in the machine learning landscape. By providing a common model format, it enables models to be transferred between machine learning frameworks such as PyTorch, TensorFlow, and many others.

Using ONNX offers numerous advantages, chief among them the ease of transferring models across platforms without extensive rework. Developers can train in whichever framework suits them and deploy with whichever runtime suits production, optimizing both processes. For instance, a model trained in PyTorch can be converted to ONNX format and then deployed with an ONNX-compatible runtime such as ONNX Runtime. Serving it with TensorFlow Serving would additionally require converting the ONNX model to TensorFlow's SavedModel format.

Converting models to ONNX format is usually straightforward. Most machine learning frameworks provide built-in functions or companion libraries to export models to ONNX. For example, in PyTorch, the conversion can be accomplished using the `torch.onnx.export` function. Once the model is in ONNX format, it can be loaded by other tools that support ONNX.
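
The export step might look like the sketch below. The helper names, the tensor names `input` and `output`, and opset 17 are assumptions made for illustration, not values from any particular project. PyTorch is imported lazily so that the argument-building helper can be inspected without it installed.

```python
def build_export_kwargs(input_name="input", output_name="output", opset_version=17):
    """Collect keyword arguments for torch.onnx.export with a dynamic batch axis."""
    return {
        "input_names": [input_name],
        "output_names": [output_name],
        # Mark axis 0 as variable so the exported graph accepts any batch size.
        "dynamic_axes": {input_name: {0: "batch"}, output_name: {0: "batch"}},
        "opset_version": opset_version,
    }


def export_to_onnx(model, example_input, path):
    """Export a PyTorch module to an ONNX file at `path` (requires PyTorch)."""
    import torch  # lazy import: only needed when an export is actually run

    model.eval()  # switch off dropout and batch-norm updates before exporting
    torch.onnx.export(model, (example_input,), path, **build_export_kwargs())
    return path
```

For a model that takes a single tensor, a call such as `export_to_onnx(model, torch.randn(1, 4), "model.onnx")` would write the file. The example input only fixes the shapes and dtypes seen during export, and the accepted arguments can vary between PyTorch releases, so the official documentation for the installed version is the reference.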

Deploying ONNX models is equally versatile. Various runtime environments and deployment tools support ONNX models, making it easier to serve them in production. ONNX Runtime, for instance, is an optimized engine that executes ONNX models with high performance on different hardware platforms. This flexibility allows developers to choose the most suitable deployment strategy, whether it be cloud-based, on-premises, or edge deployments.
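
As a minimal sketch of serving with ONNX Runtime, the code below loads a model file and runs one inference call. It assumes a single-input model and a NumPy array as the batch; the helper names and the provider preference order are illustrative choices.

```python
PREFERRED_PROVIDERS = ["CUDAExecutionProvider", "CPUExecutionProvider"]


def pick_providers(available, preferred=PREFERRED_PROVIDERS):
    """Keep the preferred execution providers that this machine offers, in order."""
    chosen = [p for p in preferred if p in available]
    return chosen or ["CPUExecutionProvider"]


def run_onnx(model_path, batch):
    """Load an ONNX file and run one inference call on a NumPy array `batch`."""
    import onnxruntime as ort  # lazy import: only needed when a model is run

    providers = pick_providers(ort.get_available_providers())
    session = ort.InferenceSession(model_path, providers=providers)
    input_name = session.get_inputs()[0].name  # assumes a single-input model
    # Passing None as the output list asks for all model outputs.
    return session.run(None, {input_name: batch})[0]
```

In a real service the `InferenceSession` would normally be created once at startup and reused across requests, since loading the model is far more expensive than a single call to `run`.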

For those seeking additional information and resources, the official ONNX website (onnx.ai) offers comprehensive documentation, tutorials, and community support. By embracing ONNX, organizations can significantly enhance the interoperability and efficiency of their machine learning workflows, ensuring that they remain agile and adaptable in an ever-evolving technological landscape.

Seldon: Scalable Model Deployment on Kubernetes

Seldon is an open-source platform designed to streamline the deployment, scaling, and management of machine learning models on Kubernetes. Its robust framework enables data scientists and engineers to efficiently operationalize their models within a Kubernetes environment, leveraging the platform’s inherent scalability and resilience.

One of the key features of Seldon is its flexibility in supporting a wide range of machine learning frameworks, including TensorFlow, PyTorch, and scikit-learn, among others. This versatility ensures that organizations can incorporate Seldon into their existing workflows without the need to modify their models extensively.

The architecture of Seldon is built around Kubernetes’ microservices paradigm, which allows for modular, scalable, and maintainable model deployments. Each model is wrapped in a Docker container, facilitating seamless integration and deployment across various Kubernetes clusters. Seldon’s architecture also includes components for logging, monitoring, and canary deployments, providing comprehensive support for production-grade model serving.

To deploy a model using Seldon, first install Seldon Core on your Kubernetes cluster. This can be done with Helm charts, which simplify the installation process. For instance, the command `helm install seldon-core seldon-core-operator` initiates the installation; in practice it is usually run with additional flags that point Helm at the Seldon chart repository and a target namespace. Once Seldon Core is installed, the next step is to write the model deployment YAML file, which specifies parameters such as the Docker image, the number of replicas, and resource limits.
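
The shape of such a deployment file can be sketched by building the manifest as a Python dictionary. The field layout below follows the Seldon Core v1 `SeldonDeployment` resource as commonly documented and should be checked against the documentation for the installed version. The deployment name, image, container name, and resource limits are hypothetical.

```python
import json


def seldon_deployment(name, image, replicas=1, cpu_limit="1", memory_limit="1Gi"):
    """Build a single-model SeldonDeployment manifest as a plain dictionary."""
    container = {
        "name": "classifier",
        "image": image,
        "resources": {"limits": {"cpu": cpu_limit, "memory": memory_limit}},
    }
    predictor = {
        "name": "default",
        "replicas": replicas,
        "componentSpecs": [{"spec": {"containers": [container]}}],
        # The graph node name must match the container name above.
        "graph": {"name": "classifier", "type": "MODEL"},
    }
    return {
        "apiVersion": "machinelearning.seldon.io/v1",
        "kind": "SeldonDeployment",
        "metadata": {"name": name},
        "spec": {"name": name, "predictors": [predictor]},
    }


# Hypothetical image and name, for illustration only.
manifest = seldon_deployment(
    "churn-model", "registry.example.com/churn-model:0.1", replicas=2
)
manifest_json = json.dumps(manifest, indent=2)
```

Because `kubectl apply -f` accepts JSON as well as YAML, `manifest_json` could be written to a file and applied directly. Generating the manifest in code also makes it easy to template the image tag per model version.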

Scaling a model deployment is straightforward with Seldon. By modifying the `replicas` field in the deployment YAML file, users can scale the number of model instances up or down to meet demand. Additionally, Seldon supports autoscaling, which dynamically adjusts the number of replicas based on resource utilization metrics.
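
To show how utilization-based autoscaling arrives at a replica count, the sketch below applies the scaling rule documented for the Kubernetes Horizontal Pod Autoscaler. It assumes that autoscaling is delegated to that mechanism; the function name, bounds, and tolerance value are illustrative.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Replica count suggested by the HPA rule: ceil(current * metric / target)."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current count to avoid churn.
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, two replicas running at 90% CPU against a 60% target yield `desired_replicas(2, 90, 60) == 3`, while four replicas at 30% would be scaled down to two.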

For those seeking to delve deeper into Seldon’s capabilities, the official Seldon website offers extensive documentation, tutorials, and community resources. This ensures that users have access to the latest information and best practices for deploying and managing their machine learning models on Kubernetes.

Comparative Analysis of TensorFlow Serving, ONNX, and Seldon

When considering model deployment and serving solutions, TensorFlow Serving, ONNX, and Seldon each offer unique advantages and trade-offs. Understanding these differences is crucial for selecting the right tool for your specific needs.

TensorFlow Serving is designed specifically for TensorFlow models but supports other model formats with additional effort. Its main strengths lie in its seamless integration with TensorFlow, robust performance, and ease of deployment in production environments. TensorFlow Serving excels in scenarios where TensorFlow is the primary framework used during model development, offering optimized performance and scalability. However, its dependency on TensorFlow can be a limitation for teams using diverse modeling frameworks.

ONNX (Open Neural Network Exchange) offers a more flexible approach by supporting models from a wide range of frameworks, including PyTorch, TensorFlow, and others. This interoperability makes ONNX a strong candidate for environments where model diversity is common. Because ONNX is a model format rather than a server, it is paired in practice with a runtime such as ONNX Runtime, which provides performance optimizations across different hardware platforms. However, the extra conversion step can bring a steeper learning curve and a more complex deployment process compared to TensorFlow Serving.

Seldon stands out for its comprehensive support for various machine learning frameworks and its robust integration with Kubernetes for containerized deployments. Seldon is highly flexible, supporting models from TensorFlow, PyTorch, scikit-learn, and more. It shines in environments where a microservices architecture and container orchestration are central. Seldon’s scalability and ease of use in Kubernetes-native environments are significant advantages, though initial setup may be more complex compared to TensorFlow Serving.

The following table summarizes the key differences:

| Aspect | TensorFlow Serving | ONNX | Seldon |
|---|---|---|---|
| Flexibility | Low | High | High |
| Scalability | High | High | High |
| Ease of Use | High | Moderate | Moderate |
| Community Support | High | Moderate | High |

In summary, the choice between TensorFlow Serving, ONNX, and Seldon depends on the specific requirements of the deployment environment, including the need for framework flexibility, container orchestration, and ease of use. Evaluating these factors will help in making an informed decision tailored to your machine learning infrastructure needs.

Best Practices for Model Deployment

Deploying machine learning models in production environments requires meticulous planning and attention to several critical best practices to ensure smooth operation and reliability. One of the foundational practices is model versioning. Proper versioning allows data scientists and engineers to track changes, revert to previous iterations if necessary, and manage multiple models simultaneously. This practice is essential for maintaining a documented history of model evolution and facilitating collaborative work environments.

Another key best practice is continuous monitoring. Once a model is deployed, it is vital to monitor its performance in real time. This involves tracking metrics such as prediction accuracy, latency, and throughput to ensure the model operates within expected parameters. Monitoring tools can help detect anomalies, enabling timely interventions before issues reach end users. Examples include setting up alerts for deviations in key metrics and using dashboards to visualize model performance trends.
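
A threshold-based alert check of the kind described here could be sketched as follows. The thresholds (200 ms for p95 latency, 1% error rate) and the function names are assumptions chosen for illustration; real limits depend on the service's own targets.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list (pct between 0 and 100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def check_health(latencies_ms, error_count, request_count,
                 max_p95_ms=200.0, max_error_rate=0.01):
    """Return alert messages; an empty list means all thresholds are met."""
    alerts = []
    p95 = percentile(latencies_ms, 95)
    error_rate = error_count / request_count if request_count else 0.0
    if p95 > max_p95_ms:
        alerts.append(f"p95 latency {p95:.0f} ms exceeds {max_p95_ms:.0f} ms")
    if error_rate > max_error_rate:
        alerts.append(f"error rate {error_rate:.2%} exceeds {max_error_rate:.2%}")
    return alerts
```

Tail percentiles are used rather than the mean because a small share of slow requests can hide behind a healthy-looking average.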

Maintaining model performance over time also requires strategies for retraining and updating models. Data distributions can shift, leading to model drift and degraded performance. Regularly scheduled retraining with fresh data can mitigate this risk. A robust CI/CD (Continuous Integration and Continuous Deployment) pipeline can streamline this process, ensuring that updates are tested and deployed without disrupting service.
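
One way to decide when retraining is due is to measure how far live inputs have moved from the training data. The sketch below uses the Population Stability Index (PSI) on a single numeric feature. PSI is only one of several possible drift measures, and the 0.2 threshold is a commonly quoted rule of thumb rather than a fixed standard; all names here are illustrative.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = ((hi - lo) / bins) or 1.0  # guard against a constant feature

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the reference range fall into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def needs_retraining(psi_value, threshold=0.2):
    """Flag drift when PSI exceeds the chosen threshold."""
    return psi_value > threshold
```

A scheduled job in the deployment pipeline could compute this per feature on recent traffic and trigger the retraining workflow only when the check fires.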

Ensuring the security of deployed models is another critical best practice. Models can be vulnerable to various security threats, including adversarial attacks and data breaches. Employing encryption for data in transit and at rest, implementing robust authentication and authorization mechanisms, and conducting regular security audits are essential steps to safeguard models and their underlying data. Real-world examples include deploying models within secure, isolated environments and regularly updating security protocols to counter evolving threats.

Practical implementation of these best practices can significantly enhance the robustness and reliability of deployed machine learning models. By integrating model versioning, continuous monitoring, regular performance maintenance, and stringent security measures, practitioners can ensure that their models remain effective and secure in dynamic production environments.

Case Studies and Real-World Applications

In the realm of model deployment and serving, TensorFlow Serving, ONNX, and Seldon have demonstrated their capabilities across various industries, solving complex problems and delivering substantial results. This section delves into several case studies, showcasing how these tools have been effectively utilized in real-world scenarios.

One notable case involves a healthcare provider leveraging TensorFlow Serving to improve diagnostic accuracy. The organization faced challenges in processing large volumes of patient data quickly and accurately. By serving their machine learning model with TensorFlow Serving, they could analyze medical images and patient histories in real time. The result was a significant reduction in diagnostic errors and faster patient turnaround times, ultimately enhancing patient care and operational efficiency.

Another compelling example comes from the finance sector, where a leading bank employed ONNX to streamline their fraud detection processes. The bank needed a solution that could integrate seamlessly with their existing infrastructure while providing high accuracy in identifying fraudulent transactions. By converting their machine learning models to the ONNX format, they achieved interoperability across different frameworks and deployed the models at scale. The outcome was a marked decrease in false positives and improved detection rates, leading to substantial cost savings and enhanced security.

In e-commerce, a major retailer used Seldon to personalize customer experiences. They faced the challenge of delivering tailored recommendations to millions of users in real time. By integrating Seldon into their recommendation engine, they could deploy and manage multiple machine learning models efficiently. This implementation resulted in a significant increase in user engagement and sales, as customers received more relevant product suggestions.

These case studies illustrate the transformative impact of TensorFlow Serving, ONNX, and Seldon across different industries. From healthcare to finance and e-commerce, these tools have enabled organizations to deploy sophisticated machine learning models, solve intricate problems, and achieve impressive outcomes. The success stories underscore the versatility and efficacy of these deployment solutions in addressing diverse real-world challenges.

Conclusion and Future Directions

In the rapidly evolving landscape of machine learning, effective model deployment and serving are critical to ensure that models yield actionable insights in real time. This blog post has examined three of the foremost tools in this domain: TensorFlow Serving, ONNX, and Seldon. Each offers unique strengths, catering to different requirements and scalability considerations. TensorFlow Serving is noted for its seamless integration with TensorFlow models, ONNX stands out for its interoperability across various frameworks, and Seldon excels in its flexibility and Kubernetes-native capabilities.

As we look ahead, several trends and advancements are poised to shape the future of model deployment and serving. The integration of machine learning models with edge computing is gaining traction, driven by the need for low-latency and high-throughput applications. Edge deployments will necessitate more robust and lightweight serving solutions that can operate efficiently on devices with limited computational resources.

Moreover, containerization and orchestration technologies, particularly Kubernetes, are becoming increasingly central to deployment strategies. These technologies not only facilitate scalability but also enhance the reliability and manageability of model serving infrastructures. The emergence of serverless architectures and Functions as a Service (FaaS) also promises to simplify deployment processes, allowing developers to focus more on model development and less on infrastructure management.

In addition to these technological advancements, there is a growing emphasis on the ethical and responsible deployment of machine learning models. Ensuring model transparency, fairness, and accountability will be paramount as models are deployed at scale. Tools and frameworks that support these principles will likely see significant development and adoption.

The field of model deployment and serving is marked by continuous innovation. Practitioners and researchers are encouraged to stay abreast of the latest developments and actively explore these tools to harness their full potential. By doing so, they can contribute to the creation of more efficient, scalable, and ethical machine learning systems.